Proposal - The Innovative-Interactive-Immersive-Inspirational Metaverse

7/16/2024, 6:37:16 PM | Jeanyoon Choi

Original Notes (Pre-LLM)

The Innovative-Interactive-Immersive-Inspirational-Intelligent-Imaginative-Inclusive-Integrated Metaverse: Proposal to

Immersiveness

How to reconstruct HMD-driven immersiveness within a mobile environment

Pointing, Pinching, Zooming → Scrolling, Pinching, Zooming

Ian Cheng-like Interactions?

Dadaistic representation/reconstruction of Immersiveness within Mobile

Metaverse: Criticism? Via Repetition & Exaggeration?

Just like Portfolio Website

The original idea: how to bring interactive immersiveness into the Multi-Device Web Artwork setting? How to reconstruct HMD-driven immersiveness within a mobile environment? Could Pointing, Pinching, and Zooming (Apple Vision Pro interactions) act as Scrolling, Pinching, and Zooming on mobile? And could this also serve as a Dadaistic critique of how everyone talks about the metaverse before even engaging with it seriously?
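One possible reading of the Pinching/Zooming mapping is sketched below. It converts a two-finger pinch on the phone into a camera field of view: spreading the fingers narrows the FOV (zoom in), and pinching them together widens it. It is written in TypeScript to match the project's Next.js/Three.js stack. The names `pinchToFov` and `TouchPoint` and the 20 to 100 degree limits are hypothetical. Zooming via FOV, rather than by moving the camera forward, is a design assumption and not the project's confirmed method.

```typescript
// Hypothetical touch point in CSS pixels (e.g. taken from Touch.clientX / clientY).
interface TouchPoint {
  x: number;
  y: number;
}

const distance = (a: TouchPoint, b: TouchPoint): number => Math.hypot(a.x - b.x, a.y - b.y);

// Returns a new FOV (degrees) for a pinch that started at `start` and is now at `current`.
// The ratio of finger distances is the zoom factor; the result is clamped to [minFov, maxFov].
function pinchToFov(
  start: [TouchPoint, TouchPoint],
  current: [TouchPoint, TouchPoint],
  baseFovDeg: number,
  minFov = 20,
  maxFov = 100,
): number {
  const startDist = distance(start[0], start[1]);
  if (startDist === 0) return baseFovDeg; // degenerate gesture: leave the zoom unchanged
  const scale = distance(current[0], current[1]) / startDist; // > 1 when the fingers spread apart
  return Math.min(maxFov, Math.max(minFov, baseFovDeg / scale));
}
```

In a `touchmove` handler, the two entries of `event.touches` would supply the points. The result could then be relayed to the screens and applied as `camera.fov`, followed by `camera.updateProjectionMatrix()`, which Three.js requires after changing a PerspectiveCamera's FOV.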

The Innovative-Interactive-Immersive-Inspirational-Intelligent-Imaginative-Inclusive-Integrated Metaverse is a Multi-Device Web Artwork developed to expand the means of interactive immersiveness beyond traditional HMD/VR/AR setups while critiquing the exaggerated promises of future technologies. To reflect this critique, this residency project revisits the fundamental principles of interactive immersiveness, aiming to deepen our understanding of virtual worlds through medium-based research.

The project uses five channels: four screens (monitors or laptops) and one mobile phone. The four screens stand perpendicular to one another around the audience, forming a 360-degree immersive environment. Unlike HMDs, where the screen is attached to the eyes, these screens surround the body, so the virtual world is layered onto the real-world setting rather than replacing it. The audience interacts with the environment through their mobile phones, scanning a QR code to open a customised website that is connected to the screens in real time via WebSocket. Mobile interactions control the content on all four screens simultaneously, much like the relationship between a VR headset and its controller. The phone's orientation, tracked via its motion sensors such as the accelerometer, changes the camera rotation in the virtual world, while touch interactions on the mobile UI act like thumbsticks to navigate it.
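The controller relationship described above can be sketched as a pure function that turns one phone update into a camera state, plus per-screen yaw offsets so the four perpendicular screens tile the full circle. This is a simplified sketch with several assumptions:

- The phone reads the browser's `DeviceOrientationEvent` angles (`alpha`, `beta`, `gamma`) in portrait.
- The world is Three.js-style, with Y up and the ground on the XZ-plane.
- The virtual thumbstick is normalised to -1..1.

All names (`ControllerInput`, `updateCamera`, `screenYaw`, and so on) are hypothetical. The sketch derives yaw and pitch from the direction the back of the phone points, using the W3C rotation order Z-X'-Y''. This avoids the alpha/gamma ambiguity that appears when the phone is held upright (beta = 90).

```typescript
// Hypothetical shape of one controller update sent from the phone.
interface ControllerInput {
  alpha: number;  // DeviceOrientationEvent.alpha, degrees
  beta: number;   // DeviceOrientationEvent.beta, degrees
  gamma: number;  // DeviceOrientationEvent.gamma, degrees
  stickX: number; // virtual thumbstick, -1 (left) .. 1 (right)
  stickY: number; // virtual thumbstick, -1 (back) .. 1 (forward)
}

interface CameraState {
  x: number;     // position on the ground (XZ) plane
  z: number;
  yaw: number;   // radians about world Y (up); 0 faces -Z, positive turns left
  pitch: number; // radians; positive looks up
}

const DEG = Math.PI / 180;
const MAX_PITCH = 80 * DEG;
const clamp = (v: number, lo: number, hi: number): number => Math.min(hi, Math.max(lo, v));

// Rotates the device's -Z axis (out of the back of the phone) by R = Rz(alpha) Rx(beta) Ry(gamma)
// into an Earth frame (X east, Y north, Z up), then reads yaw and pitch from that vector.
function orientationToYawPitch(alphaDeg: number, betaDeg: number, gammaDeg: number): { yaw: number; pitch: number } {
  const [a, b, g] = [alphaDeg * DEG, betaDeg * DEG, gammaDeg * DEG];
  const east = -Math.cos(a) * Math.sin(g) - Math.sin(a) * Math.sin(b) * Math.cos(g);
  const north = -Math.sin(a) * Math.sin(g) + Math.cos(a) * Math.sin(b) * Math.cos(g);
  const up = -Math.cos(b) * Math.cos(g);
  return {
    yaw: Math.atan2(-east, north), // north maps to -Z, west to -X
    pitch: clamp(Math.asin(clamp(up, -1, 1)), -MAX_PITCH, MAX_PITCH),
  };
}

// Moves the camera where it faces: forward = (-sin yaw, -cos yaw), right = (cos yaw, -sin yaw) on XZ.
function applyThumbstick(cam: CameraState, stickX: number, stickY: number, speed: number, dt: number): CameraState {
  const s = Math.sin(cam.yaw);
  const c = Math.cos(cam.yaw);
  const dx = (-s * stickY + c * stickX) * speed * dt;
  const dz = (-c * stickY - s * stickX) * speed * dt;
  return { ...cam, x: cam.x + dx, z: cam.z + dz };
}

function updateCamera(cam: CameraState, input: ControllerInput, speed: number, dt: number): CameraState {
  const { yaw, pitch } = orientationToYawPitch(input.alpha, input.beta, input.gamma);
  return applyThumbstick({ ...cam, yaw, pitch }, input.stickX, input.stickY, speed, dt);
}

// Screen i shares the camera position but looks i * 90 degrees to the left of screen 0.
function screenYaw(screenIndex: number, baseYaw: number): number {
  return baseYaw + screenIndex * (Math.PI / 2);
}

// THREE.PerspectiveCamera takes a vertical FOV; convert a horizontal FOV for a given aspect (width / height).
function verticalFovForHorizontal(hFovDeg: number, aspect: number): number {
  return (2 * Math.atan(Math.tan((hFovDeg * DEG) / 2) / aspect)) / DEG;
}
```

On each screen, the angles could be applied with `camera.rotation.order = "YXZ"` and `camera.rotation.set(pitch, screenYaw(i, yaw), 0)`. Each camera would be created as `new THREE.PerspectiveCamera(verticalFovForHorizontal(90, aspect), aspect, near, far)`, so that the four 90-degree slices meet at the screen edges.

On iOS Safari, orientation events are only delivered after `DeviceOrientationEvent.requestPermission()` is granted from a user gesture.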

This setup offers an experience distinct from traditional HMD setups by breaking down the existing norms and practices of constructing VR worlds and situating those worlds within a multi-device web artwork. It thus gives developers, designers, artists, and audiences alike an opportunity to reflect on the notion of interactive immersiveness within virtual worlds. At the same time, it lays the foundation for a new medium beyond traditional VR/AR, offering a somewhat more accessible and immersive format. While this multi-device web artwork can be categorised as augmented reality, it is distinct from web-based single-device AR.

The Innovative-Interactive-Immersive-Inspirational-Intelligent-Imaginative-Inclusive-Integrated Metaverse, as its name indicates, critiques the flashy adjectives associated with future technology. During the pandemic and beyond, we have seen how myopic capitalism is linked with illusory promises of yet-to-be-realized technologies, packaged with inflated entrepreneurship and techno-utopianism. This project criticises such situations and argues for a steadier, more detailed, and more professional approach to each technology, much as the project itself researches the notion of interactivity in depth from an artistic perspective.

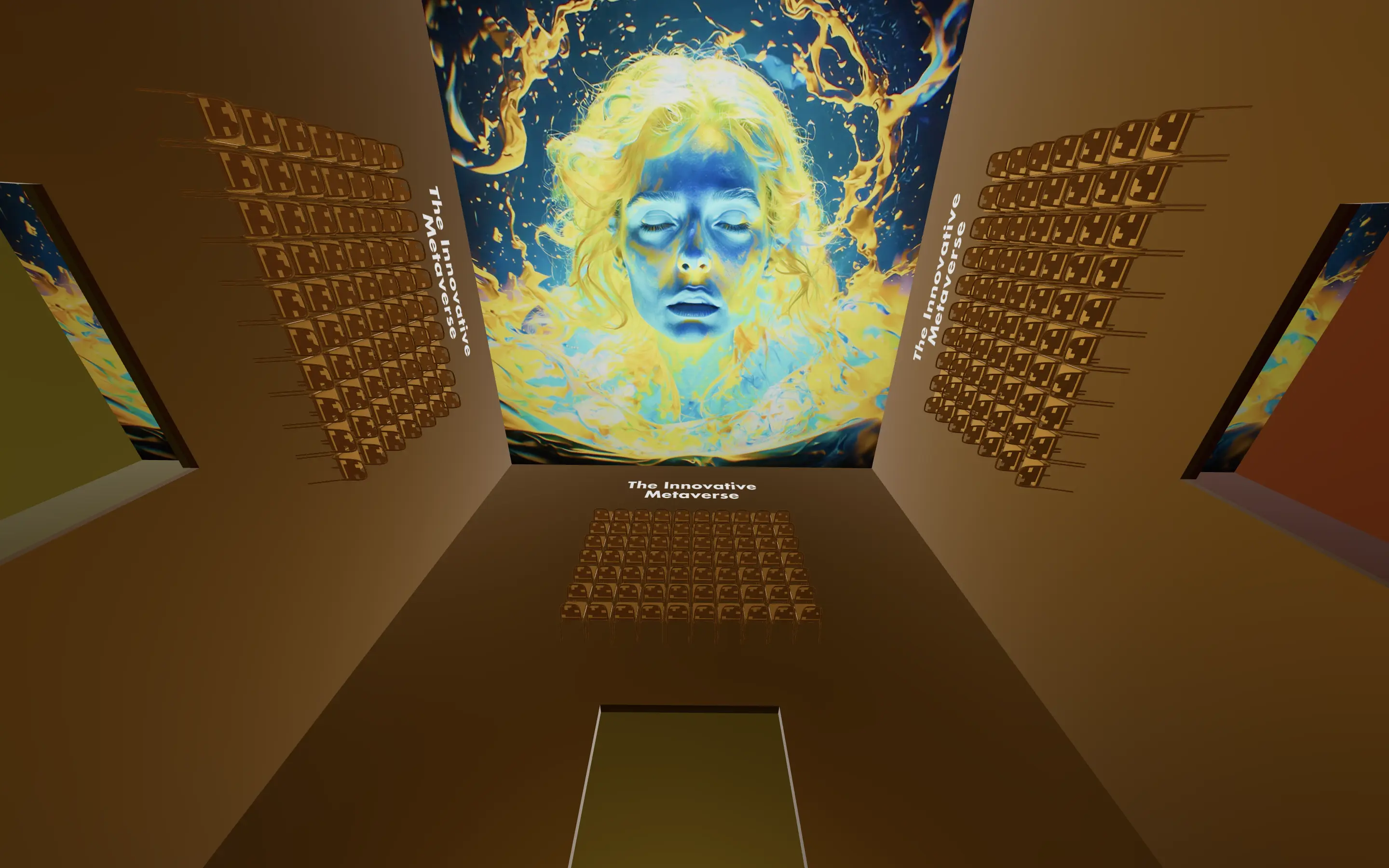

The virtual world structure for this project includes about 400 conference rooms arranged in a grid on the XY-plane, with 20 rooms along the X-axis and 20 along the Y-axis. Each row of the X-axis represents an 'I'-starting adjective (Interactive-Immersive-Inspirational-Intelligent, etc.), while each column of the Y-axis represents a technology (Blockchain-NFT-Web3, etc.). Each conference room, at the intersection of a row and a column, has a unique conference agenda (e.g., The Immersive Metaverse). When the audience enters a room, a conference begins. The chairs are arranged towards the top, and Stable Diffusion-generated images and videos presented on the ceiling mimic the professional, futuristic aesthetic often presented by technocrats. In addition, ChatGPT-written scripts voiced through TTS play as spatial audio, presenting the unfulfilled promises of future technologies. The exact content will be designed further during the residency period.

The full word list comprises two groups.

- Adjectives: Interactive-Immersive-Inspirational-Intelligent-Imaginative-Inclusive-Integrated-Intriguing-Intuitive-Infinite-Innovative-Invigorating-Inventive-Involving-Illuminating-Illustrative-Impactful-Insightful-Illuminative-Interconnected-Interdisciplinary-Independent-Ingenious-Industrious-Introspective.
- Technologies: Blockchain-NFT-Web3-AI-VR-AR-MR-IoT-BigData-MachineLearning-Metaverse-Cybersecurity-5G-QuantumComputing-EdgeComputing-CloudComputing-Robotics-Automation-Biotech-FinTech-SmartContracts-DigitalTwins-AugmentedReality-ExtendedReality-WearableTechnology-3DPrinting-Genomics-Nanotechnology-SmartCities-GreenTechnology-AutonomousVehicles-DistributedLedger-ArtificialGeneralIntelligence-NaturalLanguageProcessing-DeepLearning.
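The room grid described above can be sketched as two pieces: a lookup from a point in the grid plane to a room and its agenda, and a tracker that starts a room's conference once when the visitor enters it. The sketch rests on these assumptions:

- The first 20 adjectives and the first 20 technologies of the word list fill the 20 × 20 grid.
- Each room is a hypothetical `ROOM_SIZE` of 10 world units wide.
- The X index selects the adjective and the Y index selects the technology.

The project may assign axes or words differently, and `roomAt` and `RoomTracker` are illustrative names.

```typescript
// Assumption: the first 20 words of each group fill the 20 x 20 grid.
const ADJECTIVES: readonly string[] = [
  "Interactive", "Immersive", "Inspirational", "Intelligent", "Imaginative",
  "Inclusive", "Integrated", "Intriguing", "Intuitive", "Infinite",
  "Innovative", "Invigorating", "Inventive", "Involving", "Illuminating",
  "Illustrative", "Impactful", "Insightful", "Illuminative", "Interconnected",
];
const TECHNOLOGIES: readonly string[] = [
  "Blockchain", "NFT", "Web3", "AI", "VR", "AR", "MR", "IoT", "BigData", "MachineLearning",
  "Metaverse", "Cybersecurity", "5G", "QuantumComputing", "EdgeComputing",
  "CloudComputing", "Robotics", "Automation", "Biotech", "FinTech",
];

const ROOM_SIZE = 10; // hypothetical room width in world units

interface Room {
  xIndex: number; // index along the X-axis -> adjective (assumed)
  yIndex: number; // index along the Y-axis -> technology (assumed)
  agenda: string; // e.g. "The Immersive Metaverse"
}

// Returns the room containing grid-plane point (x, y), or null outside the grid.
function roomAt(x: number, y: number): Room | null {
  const xIndex = Math.floor(x / ROOM_SIZE);
  const yIndex = Math.floor(y / ROOM_SIZE);
  if (xIndex < 0 || xIndex >= ADJECTIVES.length || yIndex < 0 || yIndex >= TECHNOLOGIES.length) {
    return null;
  }
  return { xIndex, yIndex, agenda: `The ${ADJECTIVES[xIndex]} ${TECHNOLOGIES[yIndex]}` };
}

// Calls onEnter once each time the visitor crosses into a different room;
// this is where the room's images, videos, and TTS audio would be started.
class RoomTracker {
  private currentKey: string | null = null;
  private readonly onEnter: (room: Room) => void;

  constructor(onEnter: (room: Room) => void) {
    this.onEnter = onEnter;
  }

  update(x: number, y: number): void {
    const room = roomAt(x, y);
    const key = room ? `${room.xIndex},${room.yIndex}` : null;
    if (key === this.currentKey) return; // still in the same room (or still outside)
    this.currentKey = key;
    if (room) this.onEnter(room);
  }
}
```

Calling `tracker.update` once per frame with the visitor's position keeps entry detection separate from rendering. Leaving the grid resets the tracker, so re-entering a room restarts its conference.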

Technically, this project uses Next.js and React.js for the frontend framework, with the virtual world constructed via Three.js. Inter-device interaction is implemented via WebSocket, allowing real-time control of the monitor scenes from mobile interactions.
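A browser page can open a WebSocket but cannot accept one. The phone and the four screens therefore presumably all connect to a small relay server that forwards the phone's messages to the screens. The sketch below shows only that routing logic. It uses a hypothetical JSON message format (`orientation` and `stick` messages) and a minimal `Sendable` interface that both the browser `WebSocket` and sockets from the Node `ws` package satisfy. The message names and validation rules are assumptions, not the project's actual protocol.

```typescript
// Hypothetical messages sent by the phone (assumed format, not the project's protocol).
type ControllerMessage =
  | { type: "orientation"; alpha: number; beta: number; gamma: number }
  | { type: "stick"; x: number; y: number };

// The only socket capability the relay needs.
interface Sendable {
  send(data: string): void;
}

const isNum = (v: unknown): v is number => typeof v === "number" && Number.isFinite(v);

// Parses and validates one raw message; returns null for anything malformed.
function parseControllerMessage(raw: string): ControllerMessage | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const { type, alpha, beta, gamma, x, y } = data as Record<string, unknown>;
  if (type === "orientation" && isNum(alpha) && isNum(beta) && isNum(gamma)) {
    return { type: "orientation", alpha, beta, gamma };
  }
  if (type === "stick" && isNum(x) && isNum(y)) {
    return { type: "stick", x, y };
  }
  return null;
}

// Forwards validated phone messages to every registered screen.
class Relay {
  private readonly screens = new Set<Sendable>();

  addScreen(socket: Sendable): void {
    this.screens.add(socket);
  }

  removeScreen(socket: Sendable): void {
    this.screens.delete(socket);
  }

  // Returns how many screens received the message (0 if it was rejected).
  fromController(raw: string): number {
    const msg = parseControllerMessage(raw);
    if (msg === null) return 0;
    const out = JSON.stringify(msg);
    for (const screen of this.screens) screen.send(out);
    return this.screens.size;
  }
}
```

With the `ws` package, a `WebSocketServer` could register screen connections with `addScreen`, for example when they identify themselves with a hypothetical `?role=screen` query parameter. It could then pass each phone socket's `message` data, converted with `toString()`, to `fromController`. Validating on the server keeps a malformed message from ever reaching the screens.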

English Version (LLM-Generated)

The Innovative-Interactive-Immersive-Inspirational-Intelligent-Imaginative-Inclusive-Integrated Metaverse, or I^8 Metaverse, is an avant-garde artwork that serves as a critique of future technology and its grandiose promises. The proposal aims to rethink the concept of immersive interactivity by transporting it into a Multi-Device Web Artwork setting, free from the constraints imposed by head-mounted displays (HMDs). The experiment attempts to mimic the actions of Pointing, Pinching, and Zooming within the mobile environment.

The I^8 Metaverse, an elaborate web-based work, consists of four monitors and a mobile device. The four screens surround the audience, creating a 360-degree immersive experience. This differs from traditional Virtual Reality (VR) or Augmented Reality (AR) setups, where the user is essentially glued to the screen. The audience is invited to participate in this digital world by scanning a QR code with their mobile devices. Upon scanning, they gain access to a website that controls the content on the four screens in real time.

This project's uniqueness lies in deconstructing and reimplementing the standard norms and practices of creating virtual worlds, providing an alternative way of experiencing immersive interactivity. It serves as a stepping stone toward a new platform that extends the limits of conventional VR or AR. The I^8 Metaverse also offers an insightful critique of seemingly cutting-edge futuristic technologies.

The immersive virtual world is structured around 400 conference rooms laid out in a grid. The grid's two axes represent adjectives beginning with 'I' and various emerging technologies, and each room is dedicated to a specific agenda that merges one adjective with one technology. The audience is welcomed into immersive, professional conference-style discussions accompanied by digitally generated images/videos and spatial audio.

This avant-garde piece uses the Next.js and React.js frameworks for frontend development and Three.js to build the virtual world. Real-time interaction between devices is enabled through WebSocket. The project invites everyone to experience a new approach to interactive immersiveness and to critique the hyped jargon of futuristic technology.

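Korean Version (LLM-Generated, Translated into English)

The Innovative, Interactive, Immersive, Inspiring, Intelligent, Imaginative, Inclusive, and Integrated Metaverse: Proposal

The aim is to explore how the subtle expression of singularity-oriented technology can be reconstructed within the mobile environment. The proposal shows the possibility of Pointing, Pinching, and Zooming (Apple Vision Pro interactions) becoming working mechanisms on mobile as Scrolling, Pinching, and Zooming. As a critique of the overrated promises of future technologies, this proposal puts forward ideas on how far interactive immersiveness can be realized in an integrated, metaverse-centred web artwork setting.

Using four monitors and one mobile device, a 360-degree environment is built around the audience. Compared with traditional HMD experiences such as VR or AR, this environment enhances the real-world setting. The audience interacts with the environment by scanning a QR code to access a customised website. This website is connected to the screens in real time and simultaneously controls their content according to mobile interactions. By deconstructing the existing practices and norms of building VR worlds within a multi-device web artwork, the project offers a new medium. At the same time, it gives developers, designers, artists, and audiences alike an opportunity to reconsider interactive immersion within virtual worlds.

This metaverse offers a critical perspective on the flashy adjectives that package future technologies. The project shows how decadent capitalism and the frenzied promises of technologies expected to arrive are linked to each other, and it argues for a more careful approach to each technology. This approach mirrors the way the project itself researches the concept of interactive immersion from an artistic perspective.

The project proposes a virtual world structure containing 400 conference rooms for discussing different situations. Each conference room sits at the intersection of an adjective beginning with "I" (Interactive-Immersive-Inspirational-Intelligent, etc.) and a technology (Blockchain-NFT-Web3, etc.). This structure will help in understanding and discussing the future promises made about particular technologies in greater depth.

Finally, the project uses Next.js and React.js as its frontend frameworks, and the virtual world is built with Three.js. Inter-device interaction is implemented via WebSocket, so mobile interactions control the monitor scenes in real time.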

Tags

Interaction Design

Immersive Design

Web Development

Metaverse

Interactive Art

Virtual Reality

Augmented Reality

WebXR

Web3

Blockchain

NFT

AI

Machine Learning

IoT

Criticism

Dadaism

Interactive Immersiveness

Multi-Device Web Artwork

Text written by Jeanyoon Choi

Ⓒ Jeanyoon Choi, 2024